A Comprehensive Guide to Video Codec Design

Wednesday, October 8, 2014

We bring together some of the key concepts and examine the issues faced by designers of video codecs and of systems that interface with video codecs. Key issues include interfacing (the format of the input and output data, controlling the operation of the codec), performance (frame rate, compression, quality), resource usage (computational resources, chip area), and design time. This last issue is important because of the fast pace of change in the market for multimedia communication systems. A short time-to-market is critical for video coding applications. We discuss methods of streamlining the design flow.

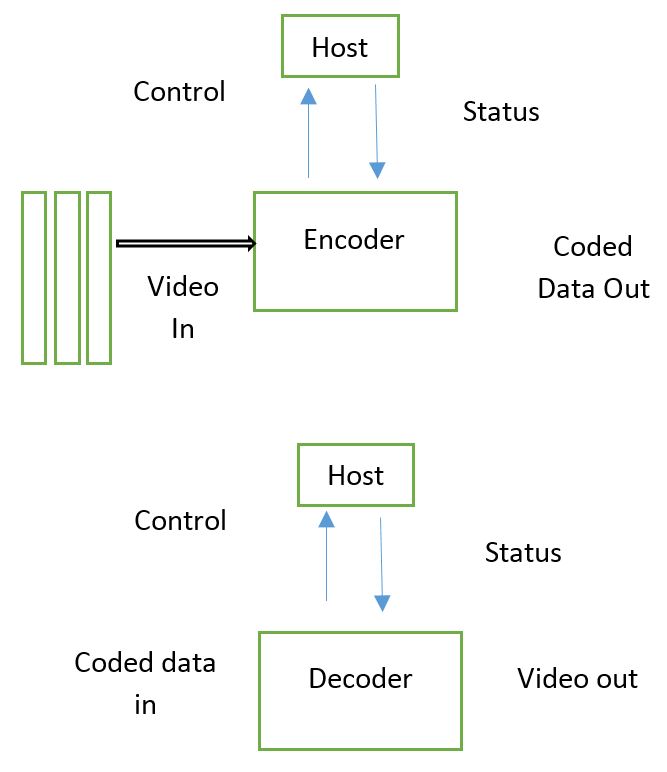

The Main Interfaces to a Video Encoder and Video Decoder

- Encoder input: frames of uncompressed video (from a frame grabber or other source); control parameters

- Encoder output: compressed bit stream (adapted for the transmission network)

- Decoder input: compressed bit stream; control parameters

- Decoder output: frames of uncompressed video (sent to a display unit); status parameters

A video codec is typically controlled by a host application or processor that deals with higher-level application and protocol issues.

Video In/Out

There are many options available for the format of uncompressed video into the encoder or out of the decoder. We list some examples here.

The above block diagram shows a typical flow of encoding and decoding in a video design.

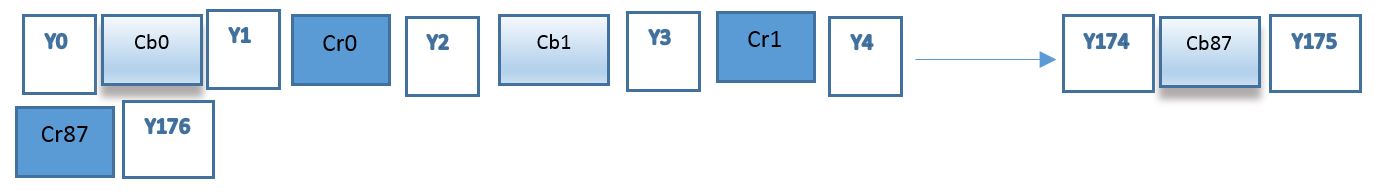

Below is a typical video line by line flow and luminance processing.

YUY2: In this format, a sample of Y (luminance) data is followed by a sample of Cb (blue color difference), a second sample of Y, a sample of Cr (red color difference), and so on. The result is that the chrominance components have the same vertical resolution as the luminance component but half the horizontal resolution. For example, if the luminance resolution is 176 x 144, the chrominance resolution is 88 x 144.
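As a minimal sketch of the YUY2 layout described above, the following Python snippet splits a packed frame into separate Y, Cb and Cr planes. The function name `unpack_yuy2` and the assumption of 8-bit samples are illustrative choices, not part of any particular codec API.

```python
def unpack_yuy2(data: bytes, width: int, height: int):
    """Split a packed YUY2 frame (8-bit samples assumed) into Y, Cb, Cr planes."""
    if width % 2:
        raise ValueError("YUY2 needs an even width (chroma is shared by pixel pairs)")
    if len(data) != width * height * 2:
        raise ValueError("buffer size does not match width x height x 2 bytes")
    # Byte order for each pair of pixels: Y0, Cb, Y1, Cr
    y = data[0::2]   # every second byte is a luminance sample
    cb = data[1::4]  # one Cb sample per pair of pixels
    cr = data[3::4]  # one Cr sample per pair of pixels
    return y, cb, cr


# Frame size taken from the example in the text: 176 x 144 luminance samples.
width, height = 176, 144
frame = bytes(width * height * 2)  # dummy all-zero frame
y, cb, cr = unpack_yuy2(frame, width, height)
print(len(y), len(cb), len(cr))    # 176*144 luma, 88*144 for each chroma plane
```

Each chroma plane comes out with half as many samples as the luminance plane, which matches the halved horizontal resolution.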

YV12: The luminance samples for the current frame are stored in sequence, followed by the Cr samples and then the Cb samples. The Cr and Cb samples have half the horizontal and vertical resolution of the Y samples. Each color pixel in the original image maps to an average of 12 bits (effectively 1 Y sample, ¼ Cr sample and ¼ Cb sample), hence the name ‘YV12’. A frame stored in this format consists of the luminance array first, followed by the half-width and half-height Cr and Cb arrays.
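The YV12 arrangement can be checked with a little arithmetic. The sketch below computes where each plane starts within a single frame buffer and confirms the 12 bits per pixel average. It assumes 8-bit samples and even frame dimensions; `yv12_layout` is a hypothetical helper name.

```python
def yv12_layout(width: int, height: int) -> dict:
    """Return (offset, size) in bytes for each plane of a YV12 frame."""
    if width % 2 or height % 2:
        raise ValueError("this sketch assumes even width and height")
    y_size = width * height
    c_size = (width // 2) * (height // 2)  # half width and half height
    return {
        "y": (0, y_size),                  # luminance plane comes first
        "cr": (y_size, c_size),            # then Cr
        "cb": (y_size + c_size, c_size),   # then Cb
        "total": y_size + 2 * c_size,
    }


layout = yv12_layout(176, 144)
bits_per_pixel = layout["total"] * 8 / (176 * 144)
print(layout)
print(bits_per_pixel)  # 12.0
```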

Separate buffers for each component (Y, Cr, Cb): The codec is passed a pointer to the start of each buffer prior to encoding or decoding a frame. As well as reading the source frames (encoder) and writing the decoded frames (decoder), both encoder and decoder are required to store one or more reconstructed reference frames for motion-compensated prediction. These frame stores may be part of the codec (e.g. internally allocated arrays in a software codec) or separate from the codec (e.g. external RAM in a hardware codec).

Memory bandwidth may be a particular issue for large frame sizes and high frame rates. For example, in order to encode or decode video at ‘television’ resolution (25 or 30 frames per second), the encoder or decoder video interface must be capable of transferring 216 Mbps. The data transfer rate may be higher if the encoder or decoder stores reconstructed frames in memory external to the codec. If forward prediction is used, the encoder must transfer data corresponding to three complete frames for each encoded frame (typically reading the new input frame, reading the stored reference frame, and writing the reconstructed frame). If two or more prediction references are used for motion estimation and compensation (for example, during MPEG-2 B-picture encoding), the memory bandwidth is higher still.
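The 216 Mbps figure can be reproduced with a short calculation. The sketch below assumes it corresponds to the ITU-R BT.601 sampling structure (13.5 MHz luminance, 6.75 MHz for each chrominance component, 8 bits per sample), which the text does not state explicitly. The function names are illustrative.

```python
# Assumed ITU-R BT.601 4:2:2 sampling parameters (not stated in the text).
LUMA_RATE_HZ = 13_500_000    # luminance samples per second
CHROMA_RATE_HZ = 6_750_000   # samples per second for each of Cb and Cr
BITS_PER_SAMPLE = 8


def interface_rate_bps() -> int:
    """Raw bit rate across the uncompressed video interface."""
    samples_per_second = LUMA_RATE_HZ + 2 * CHROMA_RATE_HZ
    return samples_per_second * BITS_PER_SAMPLE


def encoder_memory_rate_bps(frames_per_coded_frame: int = 3) -> int:
    """Memory traffic when several complete frames move per encoded frame."""
    return frames_per_coded_frame * interface_rate_bps()


print(interface_rate_bps() / 1e6)       # 216.0 Mbps
print(encoder_memory_rate_bps() / 1e6)  # 648.0 Mbps with three frame transfers
```

Under these assumptions, forward prediction with an external frame store triples the traffic to 648 Mbps, and each additional prediction reference adds at least another frame read on top of that.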

Coded Data In/Out

Coded video data is a continuous sequence of bits describing the syntax elements of coded video, such as headers, transform coefficients and motion vectors. If modified Huffman coding is used, the bit sequence consists of a series of variable-length codes (VLCs) packed together; if arithmetic coding is used, the bits describe a series of fractional numbers, each representing a series of data elements. The sequence of bits must be mapped to a suitable data unit for transmission or transport, for example:

1. Bits: If the transmission channel is capable of dealing with an arbitrary number of bits, no special mapping is required. This may be the case for a dedicated serial channel but is unlikely to be appropriate for most network transmission scenarios.

2. Bytes or words: The bit sequence is mapped to an integral number of bytes (8 bits) or words (16 bits, 32 bits, 64 bits, etc.). This is an appropriate form for any storage or transmission scenario where data is stored in multiples of a byte. The end of the sequence may need to be padded in order to make up an integral number of bytes.

3. Complete coded unit: Partition the coded stream along boundaries that make up coded units within the video syntax. Examples of these coded units include slices (sections of a coded picture in MPEG-1, MPEG-2, MPEG-4 or H.264), GOBs (groups of blocks, sections of a coded picture in H.261 or H.263) and complete coded pictures. The integrity of the coded unit is preserved during transmission, for example by placing each coded unit in a network packet.
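The byte mapping in option 2 can be illustrated with a small bit writer that packs variable-length codes together and pads the final byte. This is a minimal sketch: the `BitWriter` class, the code values written to it, and the choice of zero bits for padding are all assumptions made for illustration. Real standards define their own code tables and stuffing rules.

```python
class BitWriter:
    """Pack variable-length codes MSB-first into a byte sequence."""

    def __init__(self) -> None:
        self._bytes = bytearray()
        self._acc = 0     # bits accumulated but not yet written out
        self._nbits = 0   # number of valid bits held in _acc

    def write(self, code: int, length: int) -> None:
        """Append the lowest `length` bits of `code` to the stream."""
        if length < 0 or code >> length:
            raise ValueError("code does not fit in the given length")
        self._acc = (self._acc << length) | code
        self._nbits += length
        while self._nbits >= 8:  # emit every complete byte
            self._nbits -= 8
            self._bytes.append((self._acc >> self._nbits) & 0xFF)
        self._acc &= (1 << self._nbits) - 1  # keep only the leftover bits

    def finish(self) -> bytes:
        """Pad the last partial byte with zero bits and return the data."""
        if self._nbits:
            pad = 8 - self._nbits
            self._bytes.append((self._acc << pad) & 0xFF)
            self._acc = 0
            self._nbits = 0
        return bytes(self._bytes)


# Three hypothetical VLCs of 1, 3 and 5 bits: 1, 011, 00101 (9 bits in total)
writer = BitWriter()
writer.write(0b1, 1)
writer.write(0b011, 3)
writer.write(0b00101, 5)
packed = writer.finish()
print(packed.hex())  # b280: one full byte plus one byte holding 1 bit and 7 pad bits
```

For option 3, the same writer could be finished at the end of each slice or GOB so that every coded unit occupies a whole number of bytes and can be placed in its own network packet.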

The 8 Design Goals for a Software Video Codec

A real-time software video codec has to operate under a number of constraints, perhaps the most important of which are computational (determined by the available processing resources) and bit rate (determined by the transmission or storage medium). Design goals for a software video codec may include:

1. Maximize encoded frame rate. A suitable target frame rate depends on the application, for example, 12-15 frames per second for desktop video conferencing and 25-30 frames per second for television-quality applications.

2. Maximize frame size (spatial dimensions).

3. Maximize ‘peak’ coded bit rate. This may seem an unusual goal since the aim of a codec is to compress video: however, it can be useful to take advantage of a high network transmission rate or storage transfer rate (if it is available) so that video can be coded at a high quality. Higher coded bit rates place higher demands on the processor.

4. Maximize video quality (for a given bit rate). Within the constraints of a video coding standard, there are usually many opportunities to ‘trade off’ video quality against computational complexity, for example by using variable-complexity algorithms.

5. Minimize delay (latency) through the codec. This is particularly important for two-way applications (such as video conferencing) where low delay is essential.

6. Minimize compiled code and/or data size. This is important for platforms with limited available memory (such as embedded platforms). Some features of the popular video coding standards (such as the use of B-pictures) provide high compression efficiency at the expense of increased storage requirement.

7. Provide a flexible API, perhaps within a standard framework such as DirectX.

8. Ensure that code is robust (i.e. it functions correctly for any video sequence, all allowable coding parameters and under transmission error conditions), maintainable and easily upgradeable (for example, to add support for future coding modes and standards).

The 6 Design Goals for a Hardware Video Codec

Design goals for a hardware codec may include:

1. Maximize frame rate.

2. Maximize frame size.

3. Maximize peak coded bit rate.

4. Maximize video quality for a given coded bit rate.

5. Minimize latency.

6. Minimize pin count, chip area, on-chip memory and/or power consumption when designing with dedicated chips.

Summary

The design of a video codec depends on the target platform, the transmission environment, and the user requirements. However, there are some common goals and good design practices that may be useful for a range of designs. Interfacing to a video codec is an important issue, because of the need to efficiently handle a high bandwidth of video data in real time and because flexible control of the codec can make a significant difference to performance and design. There are many options for partitioning the design into functional blocks and the choice of partition will affect the performance and modularity of the system. A large number of alternative algorithms and designs exist for each of the main functions in a video codec. A good design approach is to use simple algorithms where possible and to replace these with more complex, optimized algorithms in performance-critical areas of the design.

Comprehensive testing with a range of video material and operating parameters is essential to ensure that all modes of codec operation are working correctly.

For more information about video codecs, call Symmetry Electronics at (310) 536-6190, or contact us online.